Why Ignoring DeepSeek Will Cost You Sales

Maritza Hallida… Posted 25-02-01 03:43

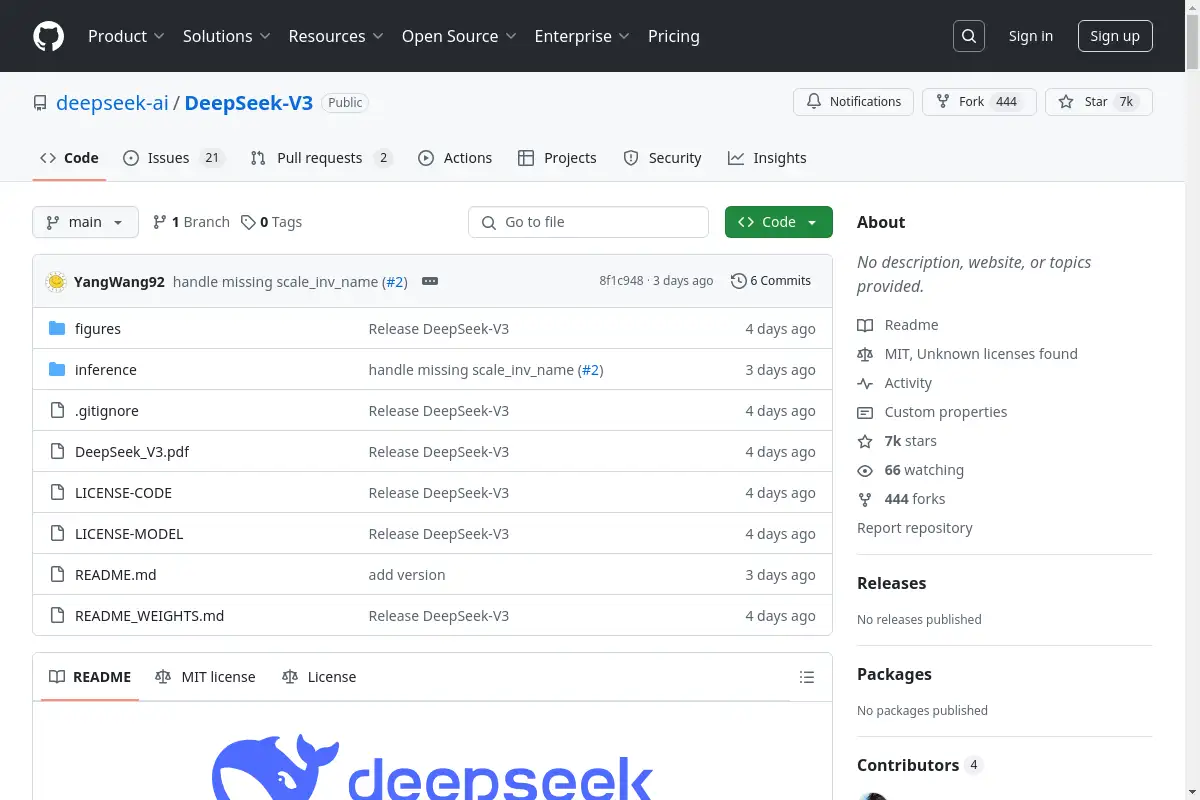

By open-sourcing its models, code, and data, DeepSeek LLM hopes to promote widespread AI research and commercial applications. Data Composition: Our training data comprises a diverse mix of Internet text, math, code, books, and self-collected data respecting robots.txt. The models may inadvertently generate biased or discriminatory responses, reflecting the biases prevalent in the training data. It looks like we could see a reshaping of AI tech in the coming year. See how the successor gets either cheaper or faster (or both). We definitely see that in a number of our founders. We release the training loss curve and several benchmark metric curves, as detailed below. Based on our experimental observations, we have found that improving benchmark performance on multiple-choice (MC) questions, such as MMLU, CMMLU, and C-Eval, is a relatively straightforward task. Note: We evaluate chat models with 0-shot for MMLU, GSM8K, C-Eval, and CMMLU. We pre-trained DeepSeek language models on a vast dataset of 2 trillion tokens, with a sequence length of 4096 and the AdamW optimizer. The promise and edge of LLMs is the pre-trained state: there is no need to collect and label data or to spend time and money training your own specialized models; you just prompt the LLM. The accessibility of such advanced models could lead to new applications and use cases across various industries.

The DeepSeek LLM series (including Base and Chat) supports commercial use. The research community is granted access to the open-source versions, DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat. CCNet. We greatly appreciate their selfless dedication to AGI research. The recent release of Llama 3.1 was reminiscent of many releases this year. Implications for the AI landscape: DeepSeek-V2.5’s release marks a notable advancement in open-source language models, potentially reshaping the competitive dynamics in the field. It represents a significant advancement in AI’s ability to understand and visually represent complex concepts, bridging the gap between textual instructions and visual output. Their ability to be fine-tuned with a few examples to specialize in narrow tasks is also fascinating (transfer learning). True, I'm guilty of mixing real LLMs with transfer learning. The learning rate begins with 2000 warmup steps, after which it is stepped to 31.6% of the maximum at 1.6 trillion tokens and 10% of the maximum at 1.8 trillion tokens. Llama (Large Language Model Meta AI) 3, the next generation of Llama 2, was trained by Meta on 15T tokens (7x more than Llama 2) and comes in two sizes, 8B and 70B.
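The multi-step learning-rate schedule mentioned above (2000 warmup steps, then 31.6% of the maximum at 1.6 trillion tokens and 10% at 1.8 trillion tokens) can be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `multi_step_lr`, its parameters, and the linear shape of the warmup are hypothetical choices, since the text gives only the warmup length and the two step points.

```python
def multi_step_lr(step, tokens_seen, max_lr, warmup_steps=2000):
    """Return the learning rate for one optimizer step.

    Assumption: warmup is linear (the text only gives its length).
    The two decay points and factors are the ones quoted in the text.
    """
    if step < warmup_steps:
        # Ramp up from max_lr / warmup_steps to max_lr.
        return max_lr * (step + 1) / warmup_steps
    if tokens_seen >= 1.8e12:
        # Second step: 10% of the maximum.
        return max_lr * 0.10
    if tokens_seen >= 1.6e12:
        # First step: 31.6% of the maximum.
        return max_lr * 0.316
    return max_lr


# Hypothetical usage with a placeholder peak rate of 1.0.
for step, tokens in [(0, 0), (1999, 1e9), (5000, 1.0e12),
                     (10**6, 1.7e12), (10**6, 1.9e12)]:
    print(step, tokens, multi_step_lr(step, tokens, max_lr=1.0))
```

Note that 31.6% is approximately the square root of 10%, so the two steps each scale the rate by about the same factor.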

700bn-parameter MoE-style model, compared with the 405bn LLaMa3), and then they do two rounds of training to morph the model and generate samples from training. To discuss, I have two guests from a podcast that has taught me a ton of engineering over the past few months, Alessio Fanelli and Shawn Wang from the Latent Space podcast. A-slim-open models. DeepSeek LM models use the same architecture as LLaMA, an auto-regressive transformer decoder model. The use of DeepSeek LLM Base/Chat models is subject to the Model License. We use the prompt-level loose metric to evaluate all models. The evaluation metric employed is similar to that of HumanEval. More evaluation details can be found in the Detailed Evaluation.
