8 Things I Wish I Knew About DeepSeek


Posted by Alex, 2025-02-01 10:39

In a recent post on the social network X, Maziyar Panahi, Principal AI/ML/Data Engineer at CNRS, praised DeepSeek-V2.5 as "the world’s best open-source LLM," citing the DeepSeek team’s published benchmarks. AI observer Shin Megami Boson, a staunch critic of HyperWrite CEO Matt Shumer (whom he accused of fraud over the irreproducible benchmarks Shumer shared for Reflection 70B), posted a message on X stating he’d run a private benchmark imitating the Graduate-Level Google-Proof Q&A Benchmark (GPQA). The praise for DeepSeek-V2.5 follows a still ongoing controversy around HyperWrite’s Reflection 70B, which co-founder and CEO Matt Shumer claimed on September 5 was "the world’s top open-source AI model," according to his internal benchmarks, only to see those claims challenged by independent researchers and the wider AI research community, who have so far failed to reproduce the stated results.

The model is open source and free for research and commercial use. The DeepSeek model license allows commercial use of the technology under specific conditions. This means you can use the technology in commercial contexts, including selling services that use the model (e.g., software-as-a-service). This achievement significantly narrows the performance gap between open-source and closed-source models, setting a new standard for what open-source models can accomplish in challenging domains.

"Made in China" will be a thing for AI models, the same as for electric cars, drones, and other technologies… I don't pretend to understand the complexities of the models and the relationships they're trained to form, but the fact that powerful models can be trained for a reasonable amount (compared to OpenAI raising 6.6 billion dollars to do some of the same work) is interesting. Businesses can integrate the model into their workflows for various tasks, ranging from automated customer support and content generation to software development and data analysis. The model’s open-source nature also opens doors for further research and development. In the future, we plan to strategically invest in research across the following directions. CodeGemma is a collection of compact models specialized in coding tasks, from code completion and generation to understanding natural language, solving math problems, and following instructions. DeepSeek-V2.5 excels in a range of critical benchmarks, demonstrating its strength in both natural language processing (NLP) and coding tasks. This new release, issued September 6, 2024, combines general language processing and coding functionality in one powerful model. As such, there already appears to be a new open-source AI model leader just days after the last one was claimed.

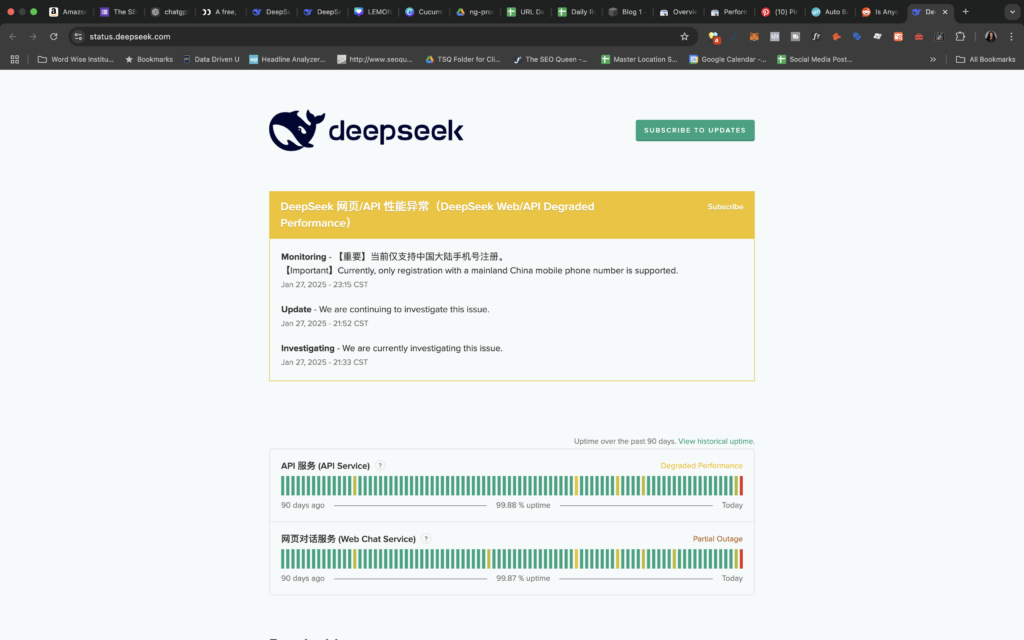

Available now on Hugging Face, the model offers users seamless access via web and API, and it appears to be the most advanced large language model (LLM) currently available in the open-source landscape, according to observations and tests from third-party researchers. Some sceptics, however, have challenged DeepSeek’s […] user may use it only 50 times a day. As for Chinese benchmarks, except for CMMLU, a Chinese multi-subject multiple-choice task, DeepSeek-V3-Base also shows better performance than Qwen2.5 72B. (3) Compared with LLaMA-3.1 405B Base, the largest open-source model with 11 times the activated parameters, DeepSeek-V3-Base also exhibits much better performance on multilingual, code, and math benchmarks.
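The "11 times the activated parameters" comparison can be checked with a quick calculation. The sketch below is only illustrative: the 37B activated-parameter figure for DeepSeek-V3 is an assumption taken from DeepSeek's own published materials, not from this post, and the function and constant names are hypothetical. LLaMA-3.1 405B is treated as a dense model, so all of its parameters count as activated.

```python
# Assumed figures (not stated in this post), in billions of parameters:
# DeepSeek-V3 is a mixture-of-experts model that activates about 37B
# parameters per token; LLaMA-3.1 405B is dense, so all 405B are active.
DEEPSEEK_V3_ACTIVATED_B = 37.0
LLAMA_31_ACTIVATED_B = 405.0


def activated_ratio(larger_b: float, smaller_b: float) -> float:
    """Return how many times more parameters the larger model activates."""
    if smaller_b <= 0:
        raise ValueError("parameter count must be positive")
    return larger_b / smaller_b


ratio = activated_ratio(LLAMA_31_ACTIVATED_B, DEEPSEEK_V3_ACTIVATED_B)
print(f"LLaMA-3.1 405B activates about {ratio:.1f}x as many parameters")
```

Under these assumed figures the ratio rounds to 11, which matches the comparison quoted above.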
