It's Arduous Sufficient To Do Push Ups - It's Even More durab…

Page information

Posted by Hai on 25-02-01 11:19

These are a set of personal notes on the DeepSeek core readings (extended) (elab). First, to accelerate model training, the majority of core computation kernels, i.e., GEMM operations, are implemented in FP8 precision. As illustrated in Figure 7 (a), (1) for activations, we group and scale elements on a 1x128 tile basis (i.e., per token per 128 channels); and (2) for weights, we group and scale elements on a 128x128 block basis (i.e., per 128 input channels per 128 output channels). We attribute the feasibility of this approach to our fine-grained quantization strategy, i.e., tile-wise and block-wise scaling. With the DualPipe strategy, we deploy the shallowest layers (including the embedding layer) and the deepest layers (including the output head) of the model on the same PP rank.

An analytical ClickHouse database tied to DeepSeek, "completely open and unauthenticated," contained more than 1 million instances of "chat history, backend data, and sensitive information, including log streams, API secrets, and operational details," according to Wiz.

DeepSeek's first generation of reasoning models offers performance comparable to OpenAI-o1 and includes six dense models distilled from DeepSeek-R1 based on Llama and Qwen. We further conduct supervised fine-tuning (SFT) and Direct Preference Optimization (DPO) on the DeepSeek LLM Base models, resulting in the creation of the DeepSeek Chat models.
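The 1x128 tile and 128x128 block grouping described above can be illustrated with a small pure-Python sketch. It only computes and applies the per-group scales; the actual cast to FP8 is omitted. The function names (`tile_scales`, `block_scales`, `apply_tile_scales`) are hypothetical, and the use of the E4M3 format with a maximum finite value of 448 is an assumption, since the notes do not name the FP8 format.

```python
# Assumption: FP8 E4M3, whose largest finite magnitude is 448.
FP8_E4M3_MAX = 448.0


def _scale_for(amax):
    """Scale that maps the group's largest magnitude onto the FP8 maximum."""
    return amax / FP8_E4M3_MAX if amax > 0 else 1.0


def tile_scales(row, tile=128):
    """One scale per 1 x `tile` slice of a single token's activations."""
    return [
        _scale_for(max(abs(v) for v in row[start:start + tile]))
        for start in range(0, len(row), tile)
    ]


def apply_tile_scales(row, scales, tile=128):
    """Divide each element by the scale of the tile it belongs to."""
    return [v / scales[i // tile] for i, v in enumerate(row)]


def block_scales(weight, block=128):
    """One scale per `block` x `block` sub-matrix of a weight (list of rows)."""
    rows, cols = len(weight), len(weight[0])
    scales = []
    for r in range(0, rows, block):
        scale_row = []
        for c in range(0, cols, block):
            amax = max(
                abs(weight[i][j])
                for i in range(r, min(r + block, rows))
                for j in range(c, min(c + block, cols))
            )
            scale_row.append(_scale_for(amax))
        scales.append(scale_row)
    return scales


# One token with 256 channels: a small-valued tile next to a large-valued tile.
row = [0.5] * 128 + [896.0] * 128
scales = tile_scales(row)
scaled = apply_tile_scales(row, scales)

# A 256 x 256 weight with a single large entry in the top-left block.
weight = [[1.0] * 256 for _ in range(256)]
weight[0][0] = 448.0
w_scales = block_scales(weight)
```

Because each tile gets its own scale, the large values in the second tile do not force the small values in the first tile toward zero, which is the motivation for scaling at this granularity.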

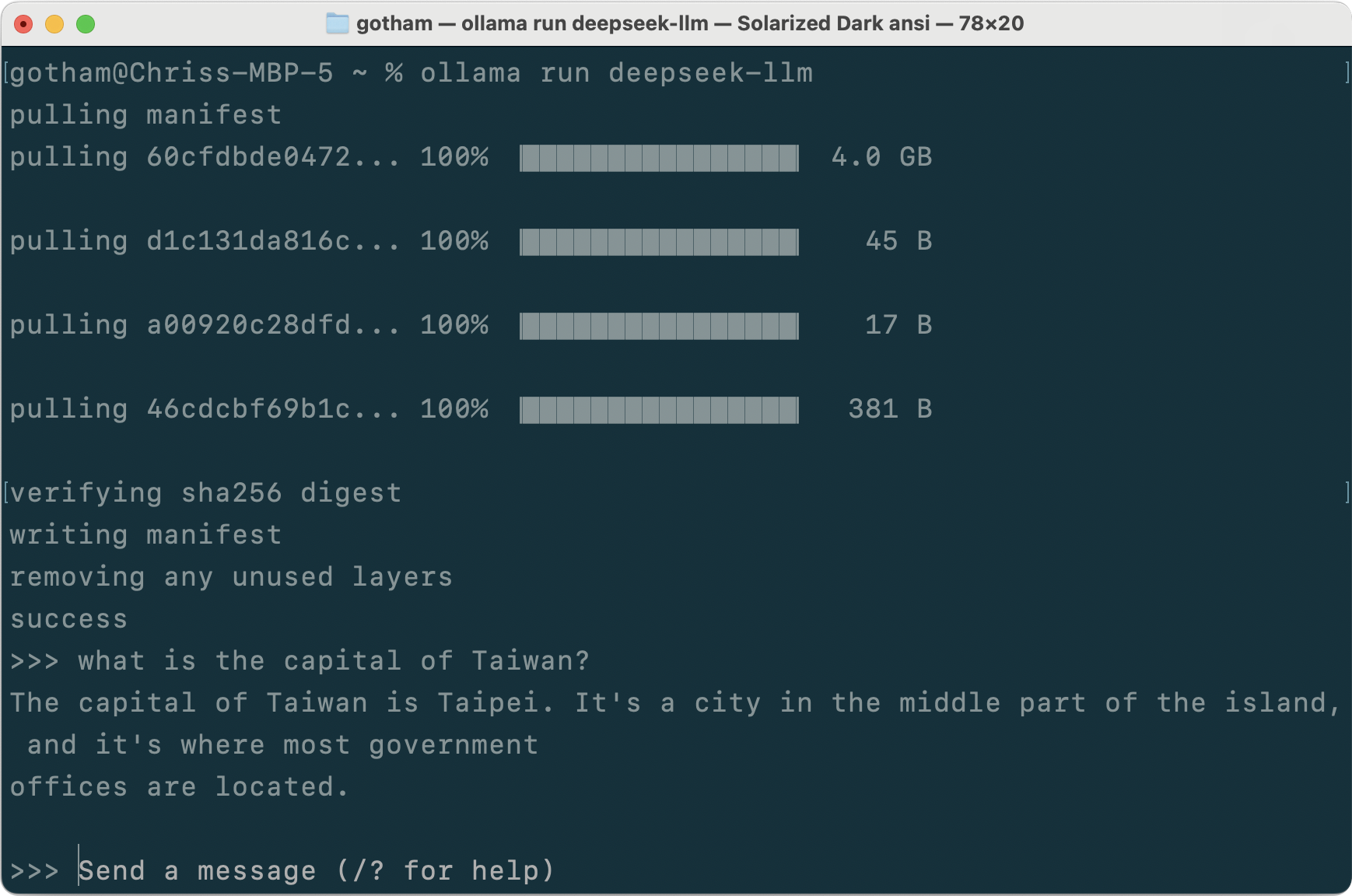

After it has finished downloading, you should end up with a chat prompt when you run this command. Often, I find myself prompting Claude like I'd prompt an incredibly high-context, patient, impossible-to-offend colleague - in other words, I'm blunt, short, and speak in a lot of shorthand. A few years ago, getting AI systems to do useful stuff took a huge amount of careful thinking as well as familiarity with the setting up and maintenance of an AI developer environment.

Why this matters - signs of success: Stuff like Fire-Flyer 2 is a symptom of a startup that has been building sophisticated infrastructure and training models for many years.

Following this, we perform reasoning-oriented RL like DeepSeek-R1-Zero. To solve this, we propose a fine-grained quantization method that applies scaling at a more granular level. Notably, compared with the BF16 baseline, the relative loss error of our FP8-training model remains consistently below 0.25%, a level well within the acceptable range of training randomness. At the small scale, we train a baseline MoE model comprising approximately 16B total parameters on 1.33T tokens. Assuming the rental price of the H800 GPU is $2 per GPU hour, our total training costs amount to only $5.576M.
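The cost figure above implies a GPU-hour budget that the notes do not state directly. A quick sketch of the arithmetic follows, using only the two numbers from the text; the function name `implied_gpu_hours` is hypothetical.

```python
RENTAL_PRICE_PER_GPU_HOUR = 2.0    # USD per H800 GPU hour, as assumed in the text
TOTAL_TRAINING_COST = 5_576_000.0  # USD, the $5.576M quoted in the text


def implied_gpu_hours(total_cost, price_per_hour):
    """GPU hours implied by a total cost at a flat hourly rental price."""
    return total_cost / price_per_hour


gpu_hours = implied_gpu_hours(TOTAL_TRAINING_COST, RENTAL_PRICE_PER_GPU_HOUR)
print(f"{gpu_hours:,.0f} H800 GPU hours")  # prints "2,788,000 H800 GPU hours"
```

Note that this is a rental-price estimate derived from the stated assumption, so it scales linearly with whatever hourly price is plugged in.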

The EMA parameters are stored in CPU memory and are updated asynchronously after each training step. This method allows us to maintain EMA parameters without incurring additional memory or time overhead. In this way, communications via IB and NVLink are fully overlapped. [...] 2024a). We hope our design can serve as a reference for future work to keep pace with the latest GPU architectures. Low-precision GEMM operations often suffer from underflow issues, and their accuracy largely depends on high-precision accumulation, which is commonly performed in FP32 precision (Kalamkar et al., 2019; Narang et al., 2017). However, we observe that the accumulation precision of FP8 GEMM on NVIDIA H800 GPUs is limited to retaining around 14 bits, which is significantly lower than FP32 accumulation precision.
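To see why an accumulator that retains only about 14 bits can matter, here is a toy pure-Python model. It is an illustration under a loud assumption: the accumulator is simply rounded to 14 significant bits after every addition, which is not a description of how the H800 hardware actually behaves. The helper names `round_to_bits` and `accumulate` are hypothetical.

```python
import math


def round_to_bits(x, bits):
    """Round x to `bits` significant binary digits (toy model, not hardware)."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)  # x = mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    scale = 2 ** bits
    return math.ldexp(round(mantissa * scale) / scale, exponent)


def accumulate(values, bits=None):
    """Sum values; if `bits` is given, round the running sum after each add."""
    acc = 0.0
    for v in values:
        acc += v
        if bits is not None:
            acc = round_to_bits(acc, bits)
    return acc


# One large term followed by many small terms, as in a long dot product.
values = [1.0] + [2.0 ** -16] * 4096

full = accumulate(values)              # double-precision reference
limited = accumulate(values, bits=14)  # assumed 14-bit accumulator

print(full)     # prints 1.0625
print(limited)  # prints 1.0
```

In this toy setting every small contribution falls below half a unit in the last place of the 14-bit accumulator and is rounded away, so the whole tail of the sum is lost. That is the kind of error that accumulating in higher precision avoids.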
